What Vapi users actually want

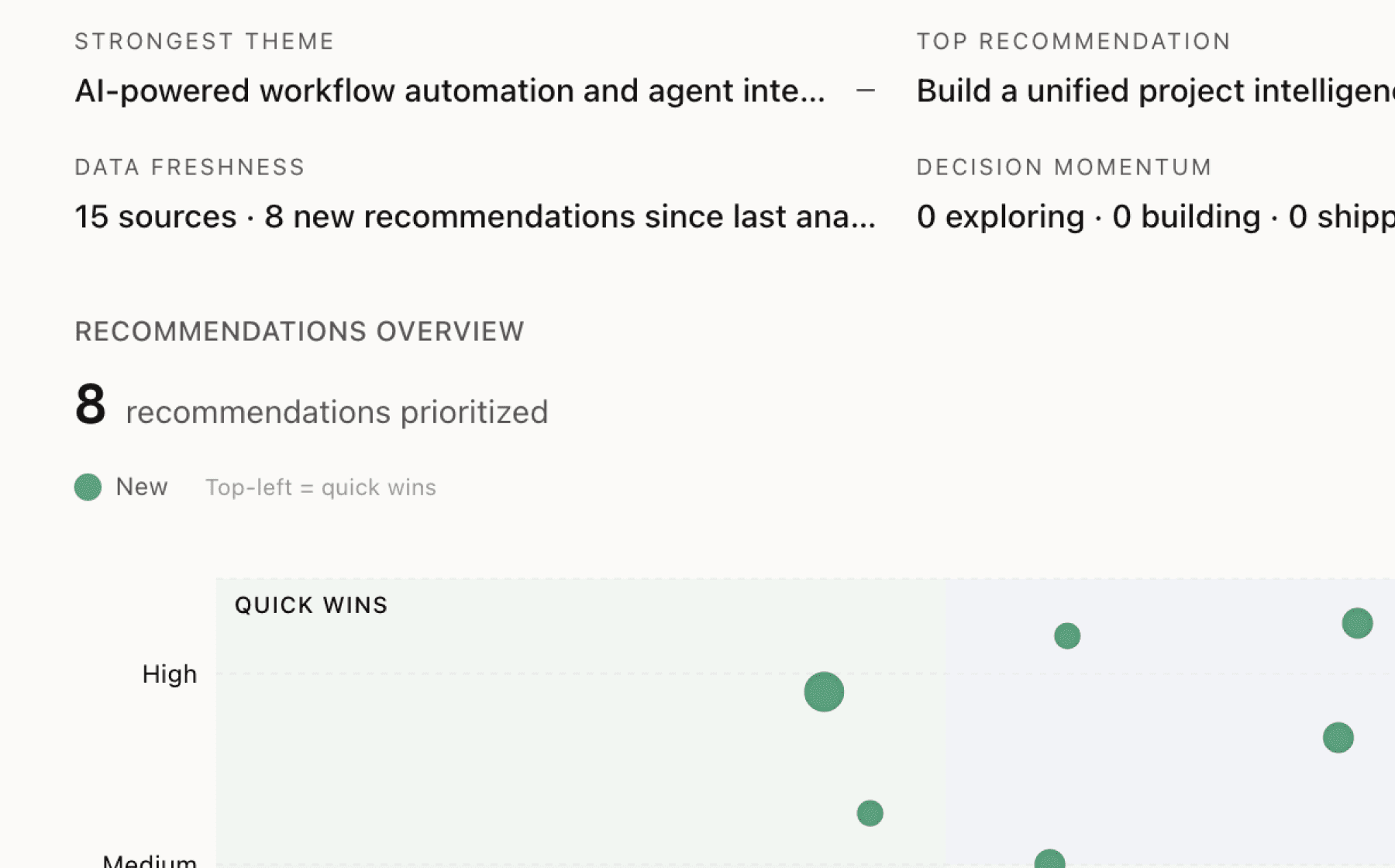

Mimir analyzed 15 public sources — app reviews, Reddit threads, forum posts — and surfaced 15 patterns with 8 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build a production readiness checklist with automated pre-deployment testing for latency, hallucination detection, and load tolerance

High impact · Medium effort

Rationale

Developers are moving voice AI from testing to production but lack objective frameworks to assess readiness. The data shows that sub-3-second latency is non-negotiable for natural conversations, yet without proper testing developers cannot objectively assess model performance. Evidence also shows that AI voice assistants generate hallucinations despite well-crafted prompts, and strong lab performance counts for little if systems crash under traffic spikes.

Vapi has processed 300M+ calls and handles 400K+ daily inbound calls, but its support system reports high load, which suggests strain under real-world pressure. Developers need confidence that their deployments will maintain sub-500ms latency and 99.99% uptime before going live. A structured pre-deployment checklist would directly address this gap between development and production.

This recommendation combines automated testing with the SUPERB benchmarks and hallucination detection frameworks already referenced in the research. Implementing it should reduce production incidents, accelerate time-to-market for developers, and support the primary metric of user engagement by preventing the post-deployment failures that drive churn.
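As a rough illustration of what an automated readiness gate could look like, here is a minimal sketch in Python. It is not Vapi's or Mimir's implementation. The 500 ms limit comes from the rationale above; the hallucination and load-failure limits, and all function and field names, are assumptions made up for this example. The count of flagged replies is assumed to come from whatever hallucination detector a team already uses.

```python
from dataclasses import dataclass
from statistics import quantiles

# The latency limit is the figure quoted in the rationale; the other two are
# placeholder assumptions, since the analysis gives no numeric targets for them.
MAX_P95_LATENCY_MS = 500.0
MAX_HALLUCINATION_RATE = 0.02   # assumed
MAX_LOAD_FAILURE_RATE = 0.001   # assumed


@dataclass
class Check:
    name: str
    observed: float
    limit: float

    @property
    def passed(self) -> bool:
        # A check passes when the observed value is at or below its limit.
        return self.observed <= self.limit


def p95(samples: list[float]) -> float:
    """Return the 95th percentile of the samples (needs at least two)."""
    return quantiles(samples, n=100, method="inclusive")[94]


def readiness_checklist(
    latencies_ms: list[float],
    flagged_replies: int,
    total_replies: int,
    failed_calls: int,
    load_test_calls: int,
) -> list[Check]:
    """Build one Check per dimension named in the recommendation."""
    return [
        Check("p95 latency (ms)", p95(latencies_ms), MAX_P95_LATENCY_MS),
        Check("hallucination rate", flagged_replies / total_replies,
              MAX_HALLUCINATION_RATE),
        Check("failure rate under load", failed_calls / load_test_calls,
              MAX_LOAD_FAILURE_RATE),
    ]


def is_ready(checks: list[Check]) -> bool:
    """A deployment is ready only if every check passes."""
    return all(check.passed for check in checks)
```

Using a percentile rather than a mean keeps a handful of fast turns from hiding a slow tail, which is what callers actually notice.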

The full product behind this analysis

Mimir doesn't just analyze — it's a complete product management workflow from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

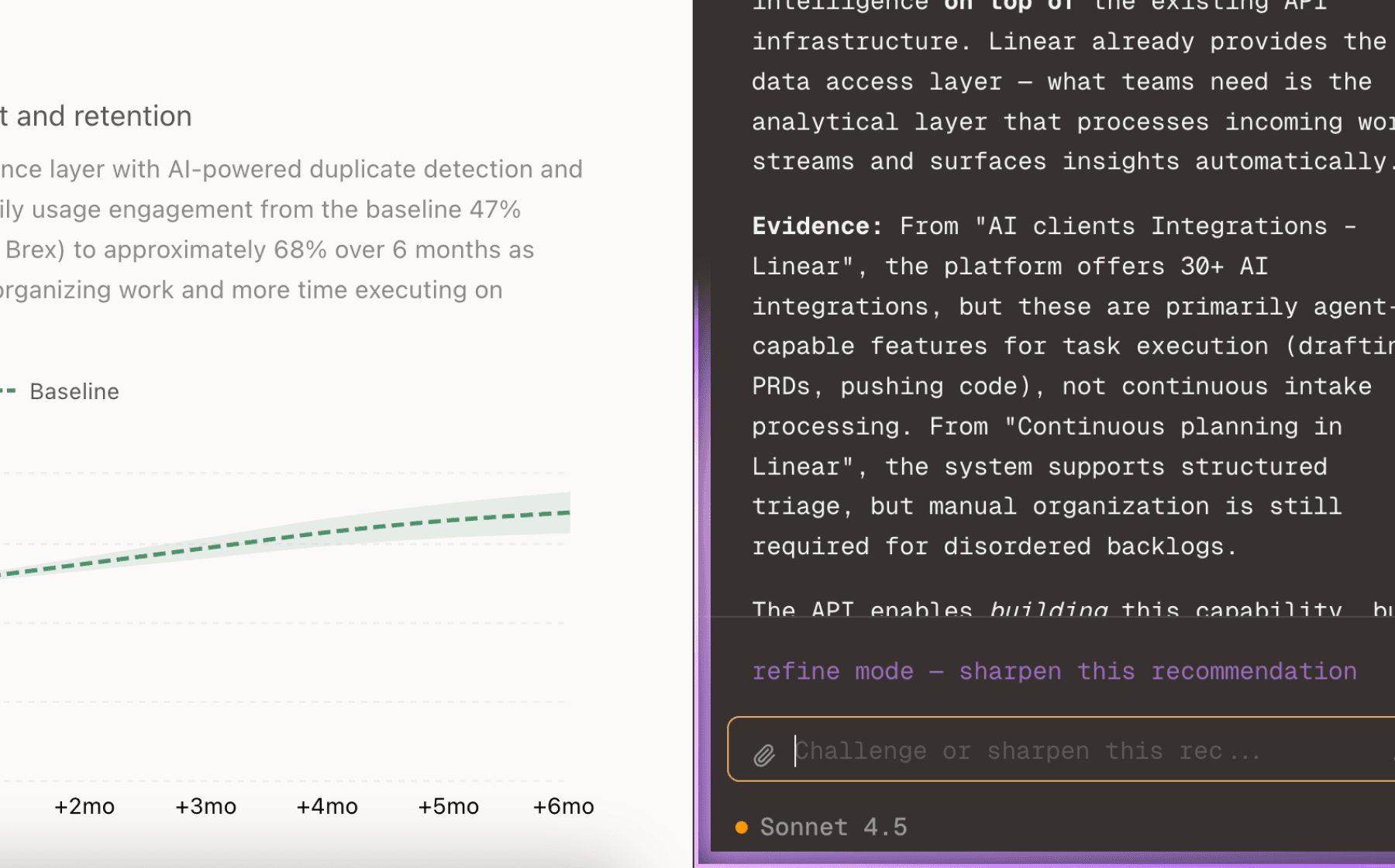

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

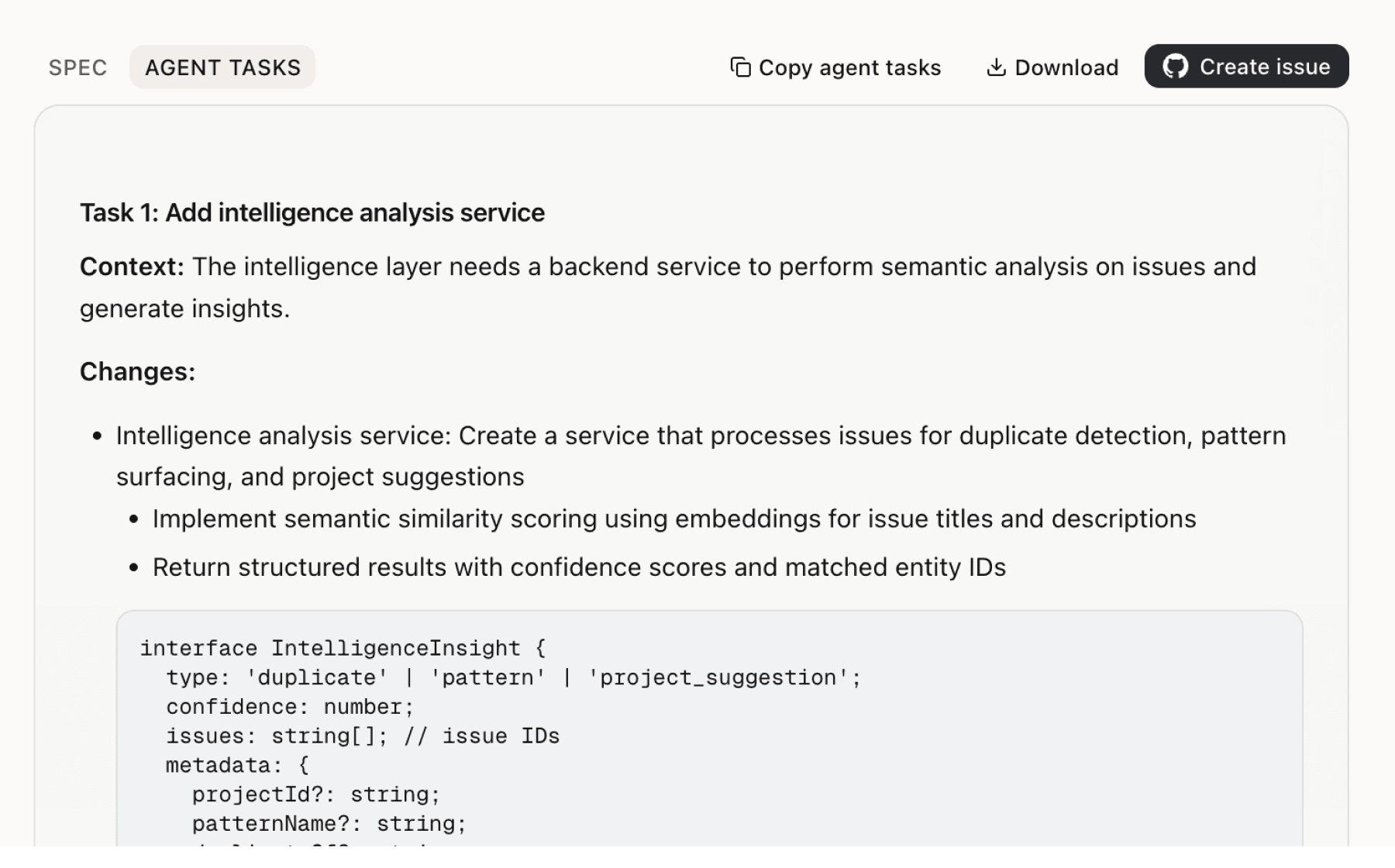

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

7 additional recommendations generated from the same analysis

Developers struggle to choose between competing AI models without clear guidance on tradeoffs. The research shows Vapi publishes extensive comparison content covering multiple providers, but developers still need help selecting appropriate models for their specific voice applications. This friction directly impacts time-to-productivity and adoption rates among the target audience of product managers, founders, and engineering leads.

Integration complexity is a major barrier to adoption. Developers need seamless connections with legacy systems requiring DTMF support, CRM platforms, and workflow automation tools like n8n, Make.com, and Go High Level. The research shows native DTMF closes workflow gaps so agents can run autonomously from dial to hang-up, but pre-built templates for common service providers are only planned, not delivered.

Developers lack visibility into production voice AI behavior, making debugging and optimization difficult. The research shows access to call logs and transcripts is important for monitoring AI performance, but support infrastructure is under high load, indicating reactive rather than proactive issue detection. Advanced monitoring tools that detect issues early and prevent escalation are explicitly highlighted as a demand signal.

Voice agent use cases have expanded across real estate, healthcare, car dealerships, and e-commerce, but each developer starts from scratch. The research shows specific industry pain points—real estate agents qualifying leads, car dealerships handling thousands of calls, e-commerce businesses recovering abandoned carts at a 70 percent rate. Tutorial content demonstrates building agents for these use cases, indicating demand but revealing implementation barriers.

Creating effective voice assistants requires extensive testing of scenarios and edge cases, but developers lack objective measures of conversation quality. The research shows that without proper testing developers cannot objectively assess model performance, and AI hallucination detection is critical for production reliability. Virtual assistants report that improved speech patterns make conversations feel less mechanical and more engaging, but measuring this improvement remains subjective.

Educational content and tutorials are extensive, but developers learn best by experiencing working implementations. The research shows Vapi has a library of live conversations demonstrating use cases, and conference sessions will be recorded for post-event access. Multiple independent creators produce tutorial content, indicating strong interest in hands-on learning, but static tutorials don't capture the nuance of real-time voice interactions.

Vapi's API-first approach is a key differentiator, but developers face friction translating configurations into production code. The research highlights that the API-first approach enables custom stacks and tool integration, with 40+ integrations and bring-your-own-models capability. Tutorials demonstrate comprehensive workflows from agent creation through deployment, but each developer manually translates visual configurations into API calls.

Insights

Themes and patterns synthesized from customer feedback

Voice agents enable emerging applications across real estate, healthcare, coding, and web development, with adoption spanning hobbyists to Fortune 500 companies. High adoption metrics (2.5M+ assistants, 120K-500K users) and strong competitive positioning point to market traction and product-market fit.

“Vapi AI is being used for coding, app designing, and web development applications”

Glow-TTS and similar solutions must balance speed and quality for production deployments while managing implementation complexity and resource constraints. TTS solutions need zero external dependencies and efficient resource management for high-volume workloads.

“Glow-TTS offers practical balance of speed and quality for production text-to-speech applications without requiring external aligners.”

Developers need access to call logs, transcripts, real-time performance insights, and proactive issue detection to monitor AI behavior and optimize applications. Advanced monitoring tools help prevent escalation and maintain platform reliability at scale.

“Access to call logs and transcripts for monitoring AI performance and call history”
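To make "proactive issue detection" concrete, the sketch below scans call-log records and flags the point at which rolling average latency crosses a threshold. The record shape (`call_id`, `latency_ms`), the window size, and the threshold are all hypothetical; they are not taken from Vapi's API or log format.

```python
from collections import deque


def detect_latency_regressions(call_log, window=5, threshold_ms=500.0):
    """Return the call_ids at which the rolling mean latency over the
    last `window` calls exceeds `threshold_ms`.

    `call_log` is an iterable of dicts with hypothetical keys
    "call_id" and "latency_ms", ordered oldest first.
    """
    recent = deque(maxlen=window)  # holds only the latest `window` latencies
    alerts = []
    for record in call_log:
        recent.append(record["latency_ms"])
        # Only evaluate once the window is full, to avoid noisy early alerts.
        if len(recent) == window and sum(recent) / window > threshold_ms:
            alerts.append(record["call_id"])
    return alerts
```

A rolling mean is a deliberately simple choice here; a production monitor would more likely track percentiles and error rates as well.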

Effective prompt engineering, emotional prompting techniques, and function call integration are critical for building high-performing AI voice assistants. Developers require guidance on optimization strategies across diverse use cases.

“Prompt engineering is the most critical aspect of building effective AI voice assistants”

Voice AI represents a fundamental shift away from keyboard/screen interfaces toward natural speech interaction. This epochal market opportunity requires developer education on adoption strategies and positioning.

“VapiCon 2025 will be held in San Francisco with exact venue details to be announced”

Vapi invests in comparisons, tutorials, newsletters, conferences, and live conversations targeting product managers, founders, and engineering leads. Educational resources and hands-on engineering support reduce time-to-productivity and drive adoption across target user segments.

“Focus on developer education through comparisons: Mistral vs Llama, Claude vs ChatGPT, Deepgram Nova versions, ElevenLabs alternatives”

Voice agents drive high-value business outcomes including 24/7 lead qualification, appointment scheduling, CRM integration, and sales reporting—reducing manual work from months to minutes. These capabilities address critical pain points for product managers and founders, directly enabling engagement and revenue impact.

“Unqualified leads and time-consuming processes slow down sales teams”

Developers need seamless integration with legacy systems (DTMF support), CRM platforms, and business tools (Make.com, Twilio, n8n, Go High Level) to embed voice agents into existing workflows. Pre-built templates and standardized integrations accelerate implementation and reduce technical barriers.

“Accessibility needs must be met to include all potential clients in sales processes”

Developers value Vapi's API-first approach enabling custom stacks, tool integration, and function calling for data fetching. The platform supports 40+ integrations and bring-your-own-models capability, allowing customization for specific use cases.

“Platform supports 40+ integrations including OpenAI, Anthropic, Deepgram, 11Labs, AWS, Salesforce, HubSpot, and Zendesk”

Developers struggle to select appropriate AI models (LLMs, STT, TTS) for voice applications. Vapi provides comparison content and educational resources, but explicit guidance on model tradeoffs and selection criteria remains a critical need to support informed decision-making.

“Vapi publishes extensive comparison content covering multiple AI models for voice applications (LLMs, STT, TTS providers)”

Support for 100+ languages enables voice AI deployment across diverse international markets and regions. Multilingual capability expands addressable use cases and reduces language barriers for global developers and end-users.

“Vapi supports over 100 languages, demonstrating multilingual capability.”

SOC2, HIPAA, and PCI compliance are essential for enterprise adoption in regulated industries. Security certifications and data protection capabilities enable deployment of voice AI in sensitive contexts while maintaining customer trust.

“Platform is SOC2, HIPAA, and PCI compliant to protect sensitive customer and transaction data”

Sub-3-second latency is essential for natural voice interactions, requiring comprehensive testing frameworks (hallucination detection, SUPERB benchmarks) and guardrails to prevent misinformation. Voice models must maintain engaging, human-like conversations with predictable performance under real-world conditions.

“Thorough testing is crucial for voice applications to ensure natural conversations and reliable user experiences.”

Developers require guaranteed uptime (99.99%), sub-500ms latency, and real-time responsiveness to safely deploy voice AI in production. Vapi's infrastructure processes 300M+ calls and 400K+ daily inbound calls, but must maintain performance under traffic spikes to prevent user churn and support enterprise adoption.

“300M+ calls processed on Vapi platform”
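For a sense of scale, the 99.99% uptime target can be converted into a downtime budget. The short Python sketch below does only that arithmetic; the function name is illustrative.

```python
def downtime_budget_minutes(availability: float, period_days: float) -> float:
    """Minutes of allowed downtime for a given availability over a period."""
    minutes_in_period = period_days * 24 * 60
    return (1.0 - availability) * minutes_in_period


# 99.99% availability over a 365-day year and over a 30-day month.
print(f"{downtime_budget_minutes(0.9999, 365):.2f} minutes per year")    # 52.56
print(f"{downtime_budget_minutes(0.9999, 30):.2f} minutes per 30 days")  # 4.32
```

In other words, a 99.99% target leaves under an hour of total downtime per year, which is why behavior under traffic spikes matters so much.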

Vapi's API-first design with pre-made templates and BYOM flexibility enables developers to deploy in days instead of months, directly reducing friction and improving user engagement. The platform saves hundreds of engineering hours through rapid onboarding and fast deployment cycles.

“API-native platform with thousands of configurations and integrations for maximum flexibility”

Run this analysis on your own data

Upload feedback, interviews, or metrics. Get results like these in under 60 seconds.