What The Prompting Company users actually want

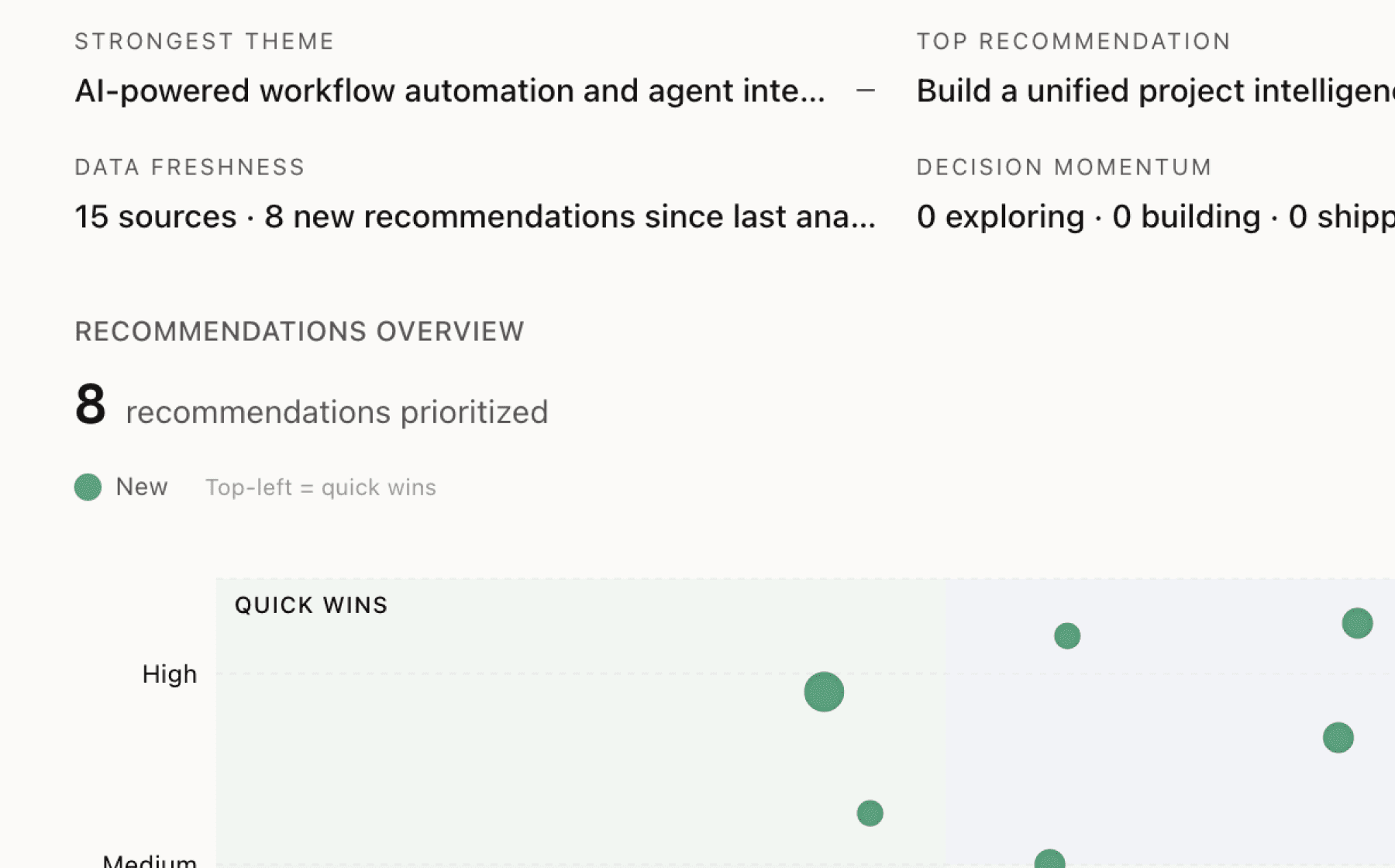

Mimir analyzed 15 public sources (app reviews, Reddit threads, and forum posts) and surfaced 13 patterns and 6 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build an end-to-end content workflow that transforms measurement insights into production-ready AI-optimized articles

High impact · Large effort

Rationale

The product currently stops at measuring visibility, while users need the ability to act on what it shows. The evidence indicates that most tools on the market provide only visibility, so users still rely on agencies to get things done. This gap explains why the Pro and Enterprise tiers include content creation as a differentiator, and why teams on Starter struggle: they lack the in-house expertise to produce AI-optimized content that meets precise parsing requirements.

The path from visibility score to citations requires markdown-based formatting, structured data, factual accuracy, and technical SEO implementation. Teams know their score is low but cannot bridge the execution gap without hiring outside help. Building guided workflows that generate compliant content from analysis insights would make the platform actionable for self-service users.

This directly targets the core business metrics of engagement and retention. Users who can act on insights will see results faster, validate the product's value, and stick around. Today the product measures a problem users cannot solve on their own, which creates frustration and churn risk in the Starter tier.


The full product behind this analysis

Mimir doesn't just analyze: it's a complete product management workflow, from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

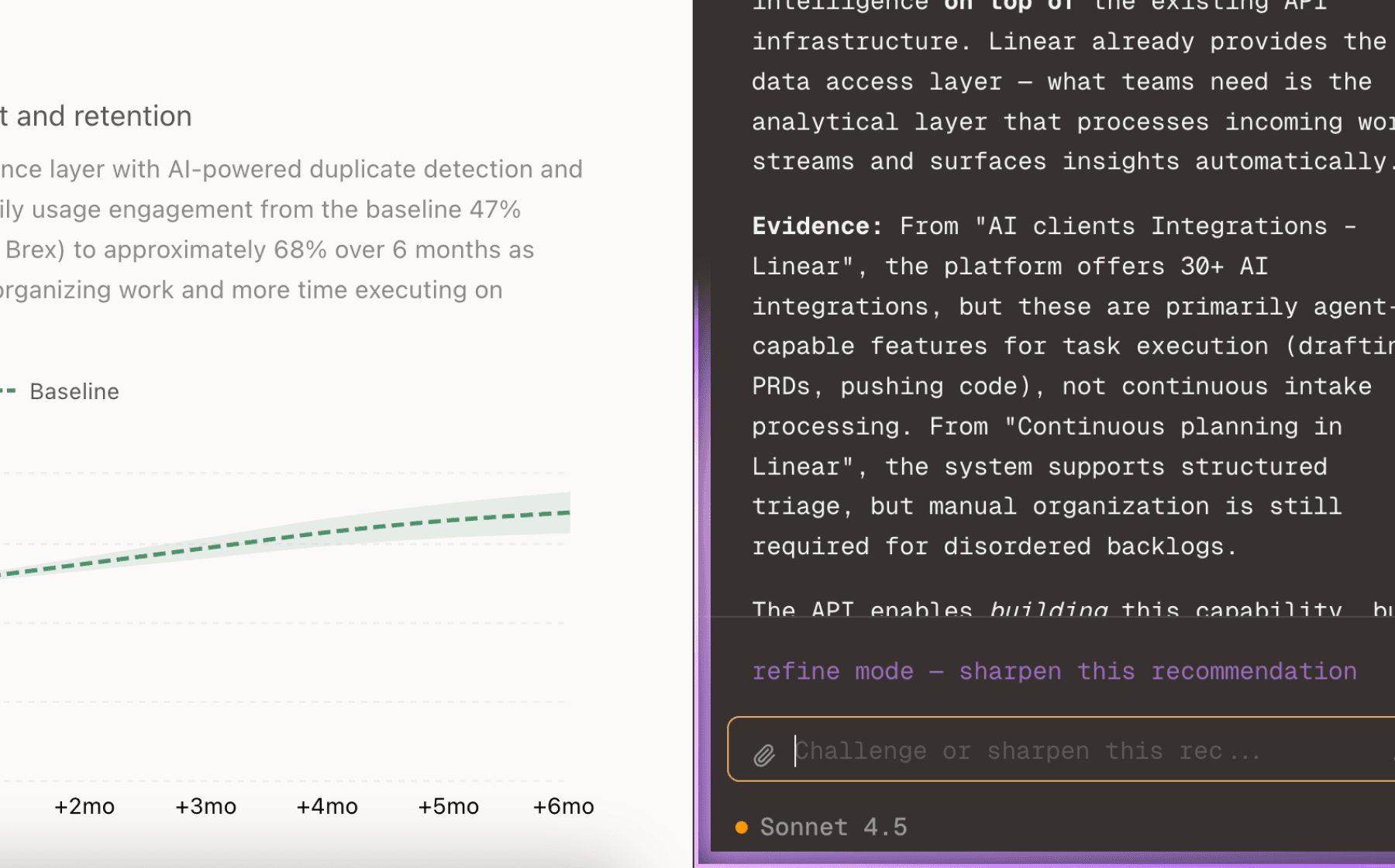

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

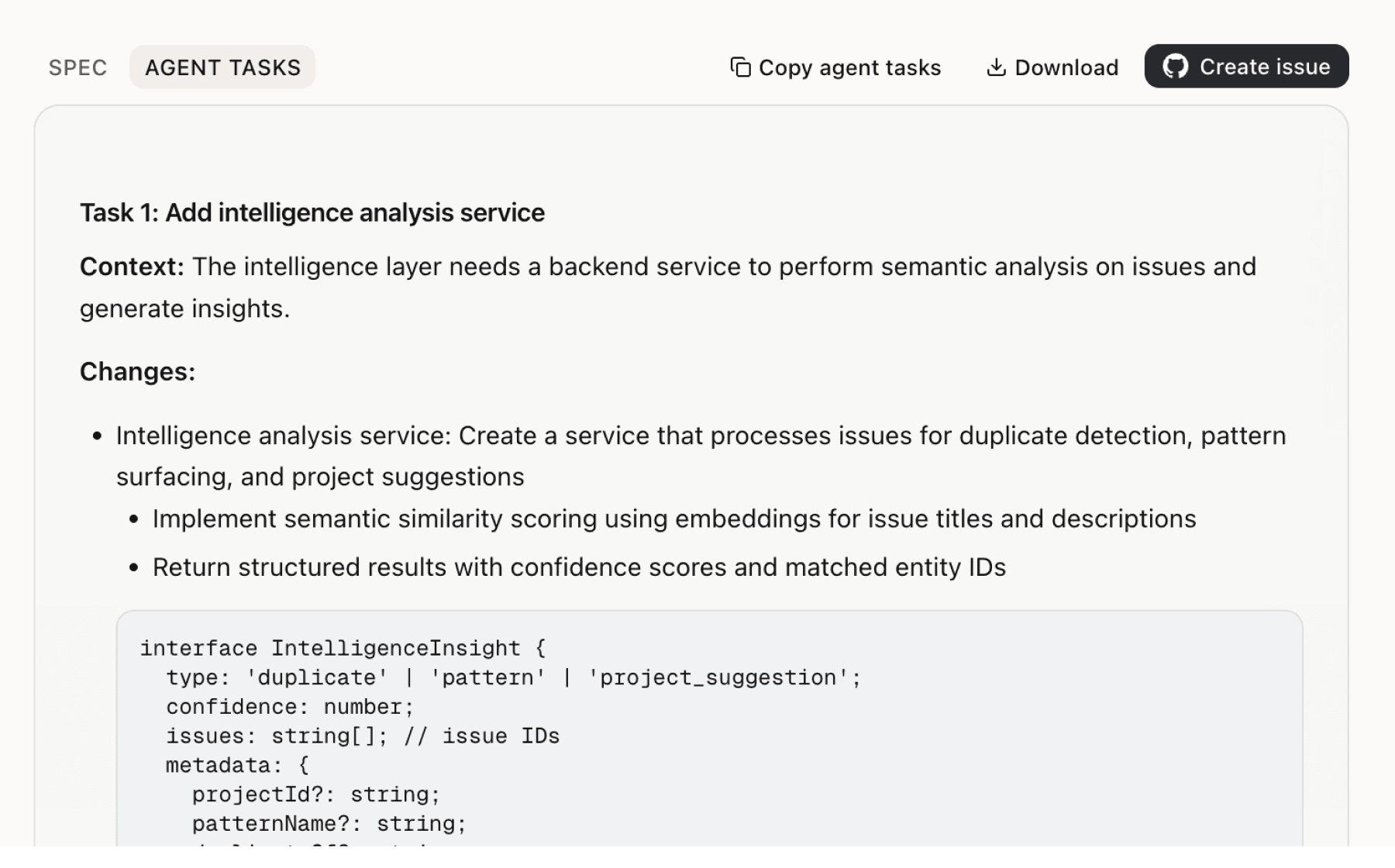

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

5 additional recommendations generated from the same analysis

Service effectiveness depends heavily on whether a business fits the knowledge categories that LLMs prioritize and whether its offering aligns with frequently asked questions. The product works best for tech startups in the HR, IT, and AI sectors, where this alignment exists naturally. Companies outside these categories may pay for the service only to discover that their domain has limited citation potential.

Citation outcomes depend on the precise implementation of several technical steps: AI-optimized content creation, markdown routing, server accessibility, and crawler permissions. The product measures visibility but does not validate whether customers have correctly deployed the infrastructure AI crawlers need to find and parse their content. Teams may see low visibility scores without realizing that the root cause is misconfigured routing or inaccessible markdown pages.
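As an illustration of the kind of deployment check described above, here is a minimal sketch that tests whether a site's robots.txt lets a few AI crawlers fetch a given page. It is not a feature of The Prompting Company's product. It assumes crawler permissions are expressed in robots.txt, and the user-agent names (`GPTBot`, `ClaudeBot`, `PerplexityBot`) and the example URL are illustrative assumptions. It uses Python's standard `urllib.robotparser` and parses the file from a string, so it runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Illustrative AI crawler user agents (assumption: adjust to the crawlers you care about).
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")


def crawler_access(robots_txt: str, url: str, agents=AI_CRAWLERS) -> dict[str, bool]:
    """Return, for each user agent, whether the given robots.txt permits fetching `url`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no HTTP request is made.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    # Sample robots.txt: blocks GPTBot entirely, allows every other agent.
    robots = "\n".join([
        "User-agent: GPTBot",
        "Disallow: /",
        "",
        "User-agent: *",
        "Allow: /",
    ])
    # Hypothetical markdown page intended for AI crawlers.
    print(crawler_access(robots, "https://example.com/docs/pricing.md"))
```

With this sample file, `GPTBot` is blocked, while the other two agents fall through to the `*` group and are allowed. A fuller check would also fetch the live robots.txt and confirm that the markdown pages actually return successfully. That part is left out here, and real crawlers may interpret edge cases differently from the standard-library parser.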

The three-tier structure creates confusion about who does what work. Starter expects teams to handle content creation themselves but lacks guidance on how to meet AI parsing requirements. Pro adds content writing but is still positioned as self-service with support rather than fully managed. Enterprise offers white-glove onboarding and specialists but is custom-priced, which obscures its value proposition.

The market messaging correctly identifies that customers now get recommendations from AI rather than Google, which represents a fundamental platform shift in product discovery. However, many potential users do not yet understand the magnitude of this change or why AI citations matter for their business. The FAQ focuses on technical mechanics rather than strategic context.

The service explicitly disclaims performance guarantees while offering measurement and optimization tools. This creates an expectation mismatch: users pay for a service that tracks visibility but cannot promise results. The evidence shows that client outcomes vary with industry competitiveness and with how relevant the product is to frequently asked AI questions, yet customers lack a reference framework for what success looks like in their specific context.

Insights

Themes and patterns synthesized from customer feedback

Service agreements include liability caps, non-refundable advance fees (except in cases of material breach), limited data retention after termination, and late-payment penalties. The company retains the right to use customer names in marketing unless the customer objects in writing.

“Company does not guarantee uninterrupted or error-free operation; will use commercially reasonable efforts to address material issues”

The product is backed by Peak XV, Base10, and Y Combinator, and trusted by tech leaders such as Rippling, Rho, and Exa. Free reports and demo access let prospects evaluate fit before committing.

“Product is trusted by multiple companies and backed by Peak XV, Base10, Y Combinator and other investors”

The product provides visibility analysis and optimization guidance but explicitly does not guarantee specific business, marketing, ranking, or performance outcomes. Users must understand that measurement and execution capability do not equal guaranteed results.

“Most of these tools are not actionable in nature; they are just providing visibility. One still has to rely on agencies to get things done.”

The meta description and the main page content list conflicting prices, which may confuse prospects comparing plans. The discrepancy suggests outdated pricing data that should be reconciled.

“Pricing discrepancy between meta description and main content suggests outdated or conflicting pricing information”

The company uses cookies, error tracking (Sentry), and other technologies to collect user data for service delivery, product improvement, marketing, and fraud prevention. Data processing occurs in the United States with potential international transfers.

“Usage tracking occurs via cookies and similar technologies, including strictly necessary, functional, and analytical cookies (Sentry for error tracking)”

Monthly plans can be canceled at the end of the billing period, while annual plans require 30 days' notice. Standard 14-day payment terms apply across all subscription models.

“Fees are exclusive of applicable taxes; billed in advance according to subscription plan (monthly or annually)”

Basic includes email support only, Pro adds Slack, and Enterprise provides a dedicated specialist with a 24-hour SLA. This support differentiation reinforces the value proposition of the higher tiers.

“Support channels include email for Basic, email and Slack for Pro, and dedicated specialist with 24h SLA for Enterprise”

Starter (listed as Basic in some sources; $99–$300/mo) targets self-service teams, Pro ($299–$1,500/mo) adds professional content creation and support, and Enterprise includes white-glove onboarding and dedicated specialists. Tier selection depends on whether teams can handle implementation independently or need managed support.

“Tiered pricing structure with Basic ($99), Pro ($299), and Enterprise (custom) plans targeting different team sizes and use cases”

LLM citations require AI-optimized content that meets specific formatting requirements (markdown-based, with structured data). Because implementation is complex and content must follow these requirements precisely, getting it right is a critical success factor for visibility gains.

“The Prompting Company has a multi-step process including AI-optimized content creation that influences LLM outputs”

The service works best for tech startups and modern companies in HR, IT, and AI sectors where their offering clearly aligns with what LLMs know and what customers frequently ask. Misalignment between a company's domain and LLM knowledge categories reduces citation opportunity.

“The Prompting Company's primary clients are modern tech companies and startups in HR, IT, and AI sectors (e.g., Rippling, Retell AI)”

The service relies on third-party LLM providers (ChatGPT, Gemini, Perplexity, Claude, DeepSeek) for availability and performance, introducing dependencies outside the company's control. Changes to LLM behavior or access can directly impact service reliability and outcomes.

“Service relies on third-party LLM providers and external AI systems; availability and performance are outside company control”

ChatGPT, Gemini, and other LLMs have fundamentally shifted how customers discover products, moving them away from search engines and toward AI-generated recommendations. Products must now optimize for AI citations and mentions to maintain market visibility and credibility.

“AI search is replacing traditional search, representing a major platform shift in how customers discover products”

The product successfully measures AI visibility across multiple LLM platforms through Visibility Scores, but most market solutions lack the actionability users need to actually improve their citations. Teams still require external help or internal expertise to move from measurement to results.

“Most tools in the market provide only visibility but lack actionability; users still need to rely on agencies to execute”
