What Prolific users actually want

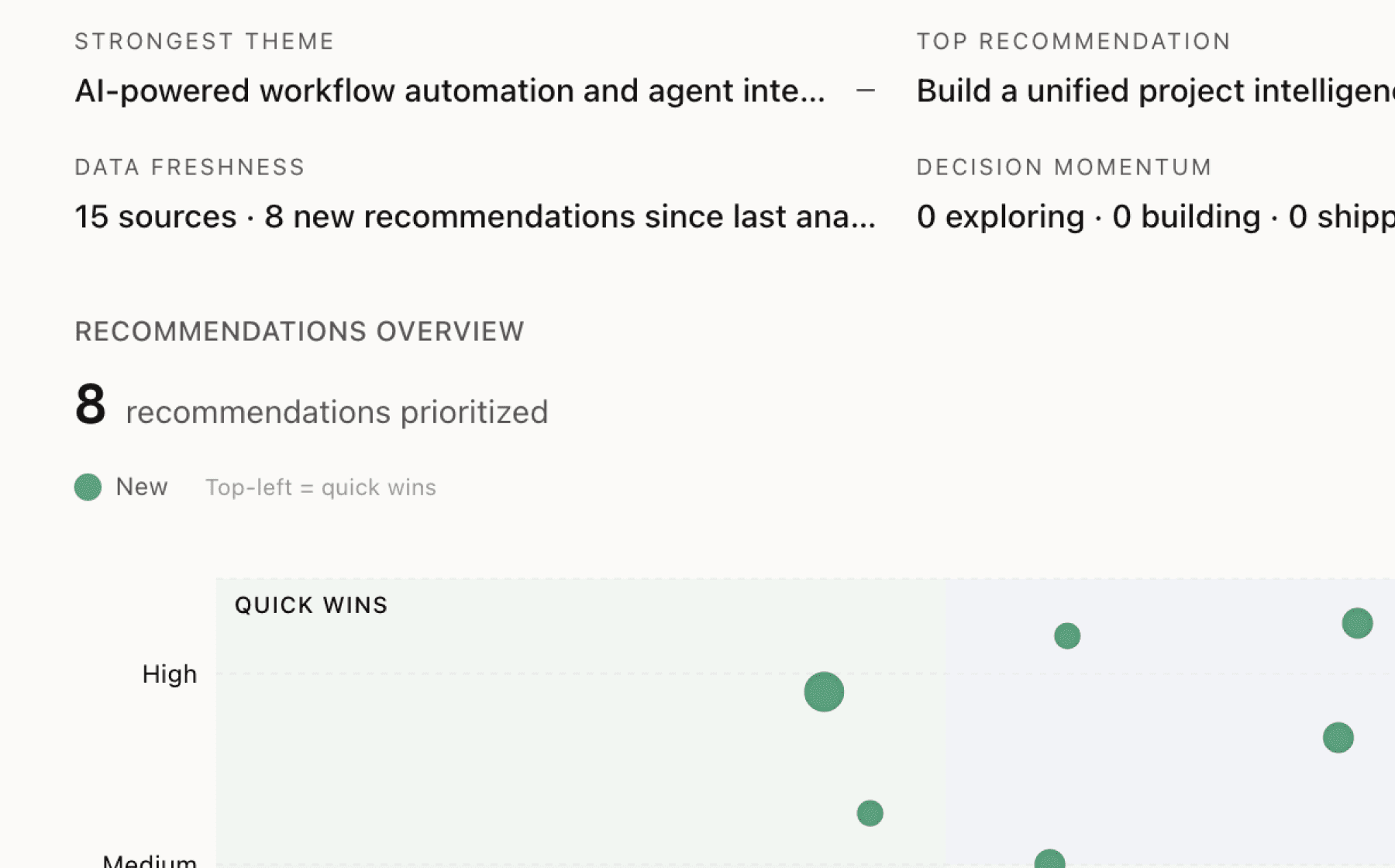

Mimir analyzed 15 public sources — app reviews, Reddit threads, forum posts — and surfaced 21 patterns with 7 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Create an interactive onboarding flow that demonstrates AI detection in action within the first session

High impact · Medium effort

Rationale

AI authenticity detection is the platform's most critical differentiator with measurable superiority (98.7% precision for AI-generated responses, 100% accuracy for bot detection). However, this capability remains abstract until users experience it firsthand. Data shows researchers are actively concerned about AI contamination in their studies, with the platform observing rising AI misuse across the industry.

An interactive onboarding that lets new users immediately see flagged responses, review the behavioral patterns detected, and understand the color-coded authenticity system would transform this technical feature into a visceral confidence builder. This directly addresses user anxiety about data validity while showcasing competitive advantage at the moment of highest engagement.

The evidence shows authenticity checks save researchers countless hours by automating what would otherwise be manual verification. Making this time-saving visible in the first session creates an immediate value anchor that drives retention and word-of-mouth growth among the target segments of product managers, founders, and engineering leads who are evaluating the platform.

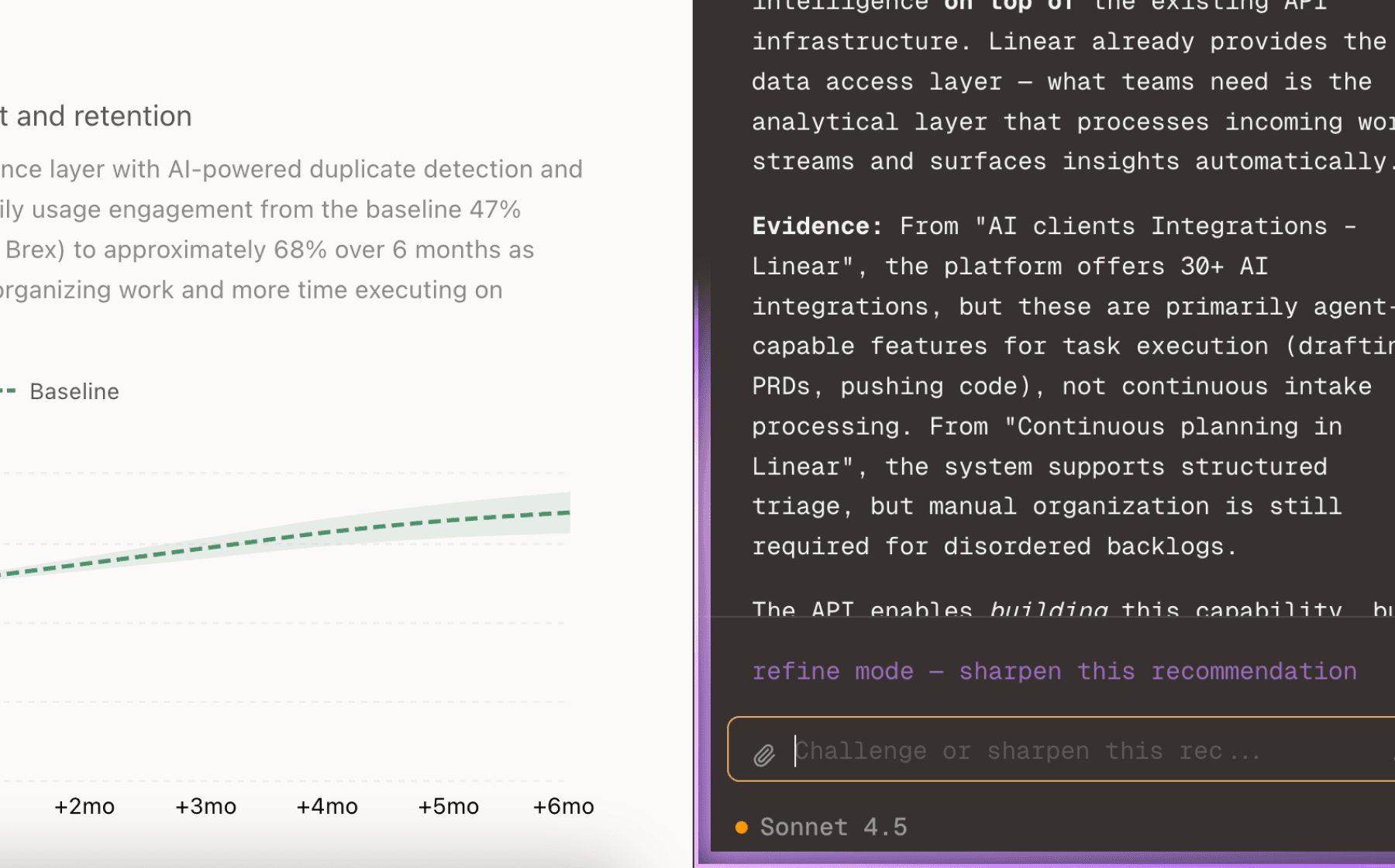

Projected impact

The full product behind this analysis

Mimir doesn't just analyze — it's a complete product management workflow from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

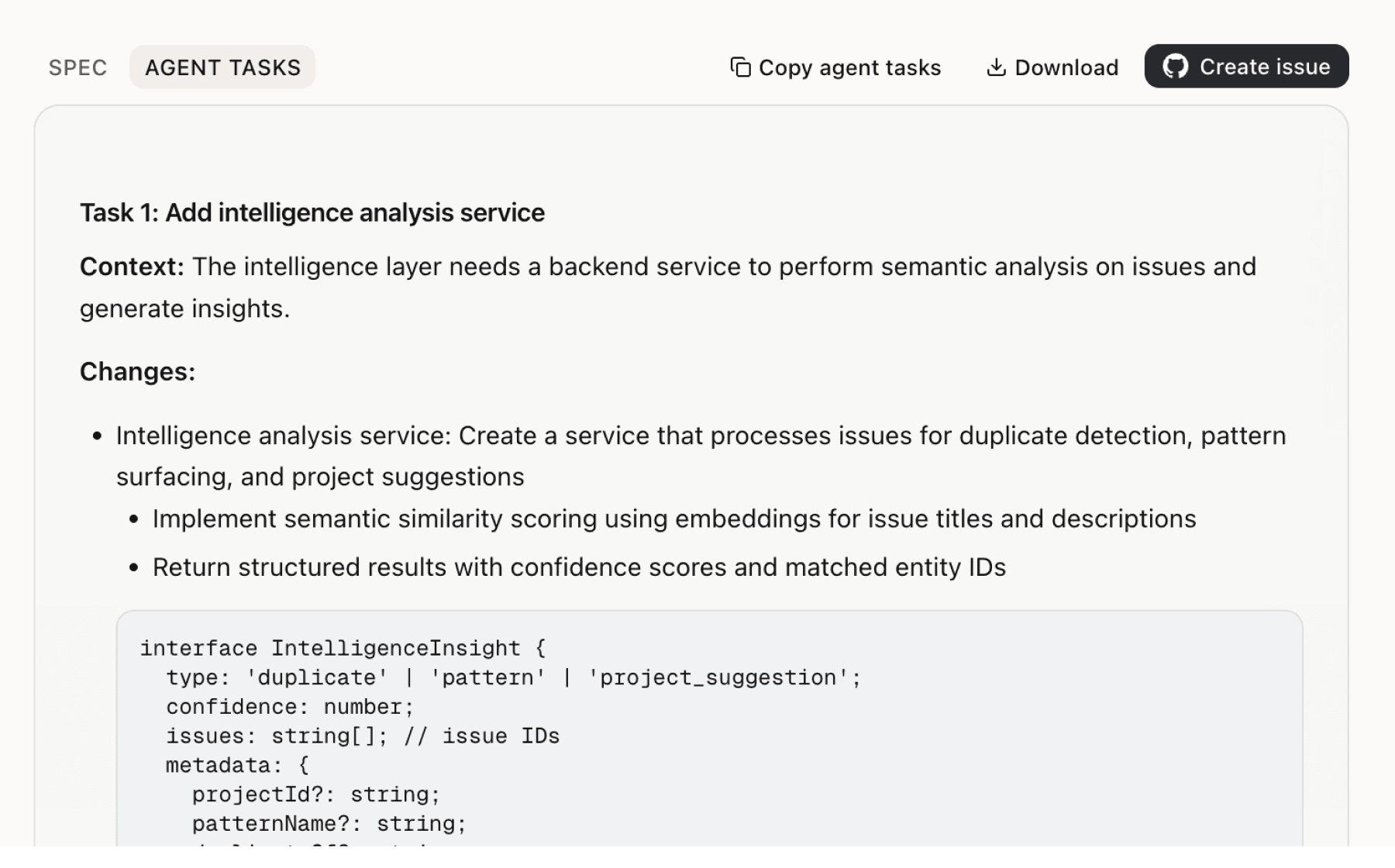

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

6 additional recommendations generated from the same analysis

Trust and transparency are foundational to the platform's identity, with a decade-long track record and explicit commitment to openness. Yet the 40+ verification checks, 0.6% false positive rate, and continuous fraud detection operate invisibly. Users evaluating the platform must take quality claims on faith rather than seeing live evidence.

The platform offers 300+ audience filters and full visibility into tasker expertise, yet users must configure filters blind without seeing who they'll actually reach. Evidence shows successful customers like Asana achieved precise audience customization, but the current experience requires users to guess at filter combinations and hope for matches.

The platform explicitly serves three distinct segments (AI/ML developers, academic researchers, participants) with different needs and success patterns. While comprehensive educational resources exist, users face a blank canvas when launching their first study. Evidence shows customers like AI2 reduced data collection from weeks to hours, but new users lack a clear path to replicate that success.

Authenticity checks automatically flag suspicious submissions with color-coded categorization (High/Low/Mixed), but users must then manually decide what to do with flagged data. The evidence shows flagged responses enable easy sorting and decision-making, yet there's no guided workflow to help users act on these flags efficiently.

The platform offers flexible pay-as-you-go pricing with no contract or setup costs, but users must calculate ROI themselves. Evidence shows customers like AI2 compressed weeks of work into hours, yet the financial value of that time saving remains implicit. The 42.8% corporate platform fee and 33.3% academic rate appear as isolated numbers, with no comparison to what the alternatives cost.

The platform runs 40+ verification checks per participant and maintains independent research showing consistently highest quality data versus competitors, but individual users have no visibility into how their specific studies perform against these standards. The Protocol quality monitoring system generates extensive data that currently remains internal.

Insights

Themes and patterns synthesized from customer feedback

Prolific's no-contract, pay-as-you-go model with differentiated pricing for corporate, academic, and non-profit users, combined with 15-minute verification and no setup costs, enables rapid user onboarding. This accessibility supports user acquisition across segments.

“Verification process takes 15 minutes, enabling quick onboarding from waitlist acceptance to earning”

Co-founded at Oxford University by researchers and collaborating with major AI organizations (Google, Hugging Face, Stanford, AI2), Prolific demonstrates research credibility and expertise. SOC 2 compliance and User Research Leader awards reinforce its market positioning.

“Platform was co-founded at Oxford University in 2014 by researchers for researchers, emphasizing credibility and research-first design”

Fast payment processing with a $6 minimum threshold, instant PayPal transfers, and transparent processes contribute to a participant NPS of 65. Fair compensation is integral to building a loyal, high-quality participant pool.

“New task published every 2 minutes with personalized eligibility filters to tailor tasks to participant profile”

Prolific emphasizes privacy notices, data transparency, auditability features, and protections against third-party sharing. These mechanisms help users maintain ethical compliance and build confidence with participants.

“Data security is emphasized with cloud storage and explicit commitment to not sharing data with third-party advertisers”

Data quality and accessibility are central to Prolific's identity and mission of 'building a better world with better data.' This alignment supports long-term user engagement and positions the platform as trustworthy.

“Data is at the centre of Prolific's business model and core values”

Prolific offers managed services including human evaluations, SME verification, and rubric design to measure capability and safety. Human evaluators are carefully profiled using demographic, behavioral, and domain-level verification.

“Offering evaluation and verification capabilities including human evaluations, SME verification, and rubric design to measure capability, safety, and quality.”

The platform maintains a robust content hub with webinars, articles, case studies, and whitepapers covering ethics, AI training, data quality, and best practices. This continuous learning infrastructure supports user engagement and retention.

“Recent content publishing cadence shows active updates in November-December 2025 with multiple product announcements”

Prolific explicitly serves three distinct user segments—AI/ML developers, academic researchers, and participants—with tailored messaging, case studies, and support infrastructure including dual help centers. This targeted approach improves user fit and retention across segments.

“Platform serves three distinct user segments: AI/ML developers, academic researchers, and participants seeking paid research opportunities”

Prolific emphasizes six core research ethics principles, ensures voluntary task exit, and provides legal transparency. This builds confidence among both researchers and participants while supporting ethical compliance.

“Ethical research principles protect participants' rights and privacy while strengthening data quality and building trust in findings.”

With 300+ audience filters and visibility into participant expertise, Prolific enables researchers to find specialized respondents—including domain experts, AI taskers, and demographics with specific characteristics. This precision directly improves research outcomes and user satisfaction.

“Asana enabled precise audience customization with advanced screening for future of work research”

Trusted enterprise customers including Google, Stanford, Hugging Face, and NIH validate product-market fit and competitive positioning. This credibility supports user acquisition and retention through demonstrated reliability.

“Trusted by thousands of organizations including Google, Stanford, Hugging Face, and Asana, indicating strong market validation”

The platform enables dramatically accelerated data collection—hours instead of weeks for AI projects, with 2-hour average response times and 15-minute study setup. This addresses friction from traditional outsourcing and enables users to keep pace with development needs.

“Outsourcing slows down AI development cycles”

Prolific positions itself as the human intelligence layer for frontier AI, providing preference data, alignment signals, and rigorous human judgment for training and evaluating AI models. This addresses a growing market need from AI labs and enterprises.

“Prolific positions itself as 'the human intelligence layer for frontier AI' offering scientifically rigorous training signals to replace opaque data providers.”

Prolific demonstrates measurably better sample quality than competitors like Cint: its participants are less likely to rush through studies, perform better on attention and comprehension tests, and give longer responses, with sub-1% dropout rates. This quality advantage directly drives user retention.

“Quality concerns with Cint samples - respondents complete studies faster, perform poorly on attention/comprehension tests, give shorter answers, less likely to answer honestly”

The platform provides APIs and direct integrations with research tools like Qualtrics, Labvanced, and Useberry, enabling users to scale projects efficiently without leaving existing workflows. This removes integration friction and supports operational efficiency.

“API automation available for scaling data collection projects of various sizes”

With 200,000+ active participants spanning 40+ countries, the platform enables researchers to access diverse global perspectives and conduct international studies. Case studies demonstrate successful research across 70+ countries.

“Platform enables global participation, with case study showing data collection from 70+ countries for AI governance research”

The platform provides unified infrastructure for agentic testing, safety testing, red teaming, and evaluation frameworks designed specifically for AI labs building frontier systems. This specialized capability addresses enterprise AI development needs.

“Agentic and safety testing to test agent behavior, tool use, multi-step workflows, and conduct targeted red teaming to uncover vulnerabilities.”

Prolific implements 40+ verification checks per participant, continuous fraud detection, and in-study quality controls that automatically flag suspicious submissions. These mechanisms ensure consistent delivery of authentic, high-quality data.

“Researchers struggle to distinguish between genuine human responses and AI-generated or AI-assisted content in studies.”

Prolific provides advanced, multi-layered systems to detect AI-generated responses, bot activity, and fraud with high precision (98.7% for AI detection, perfect accuracy for bots). This directly protects research validity and user confidence in data quality, a critical factor for retention.

“Need for tools that detect AI-generated content in research responses with high accuracy and low false positive rates.”

The platform maintains 200,000+ rigorously vetted participants (35+ verification checks including bank-grade ID verification) enabling recruitment in minutes rather than weeks. This core capability directly accelerates user workflows and drives engagement.

“Prolific enables collection of high-quality human data in minutes, positioning itself as a replacement for opaque data providers”

Prolific's commitment to transparency, ethics, and responsible practices—including Belmont Report adherence, SOC 2 compliance, and a decade-long track record—differentiates it in the market and supports long-term user retention. This foundation extends to fair participant compensation and protection.

“Participants can trust they will be treated fairly and with respect on the platform”
