What Nexus users actually want

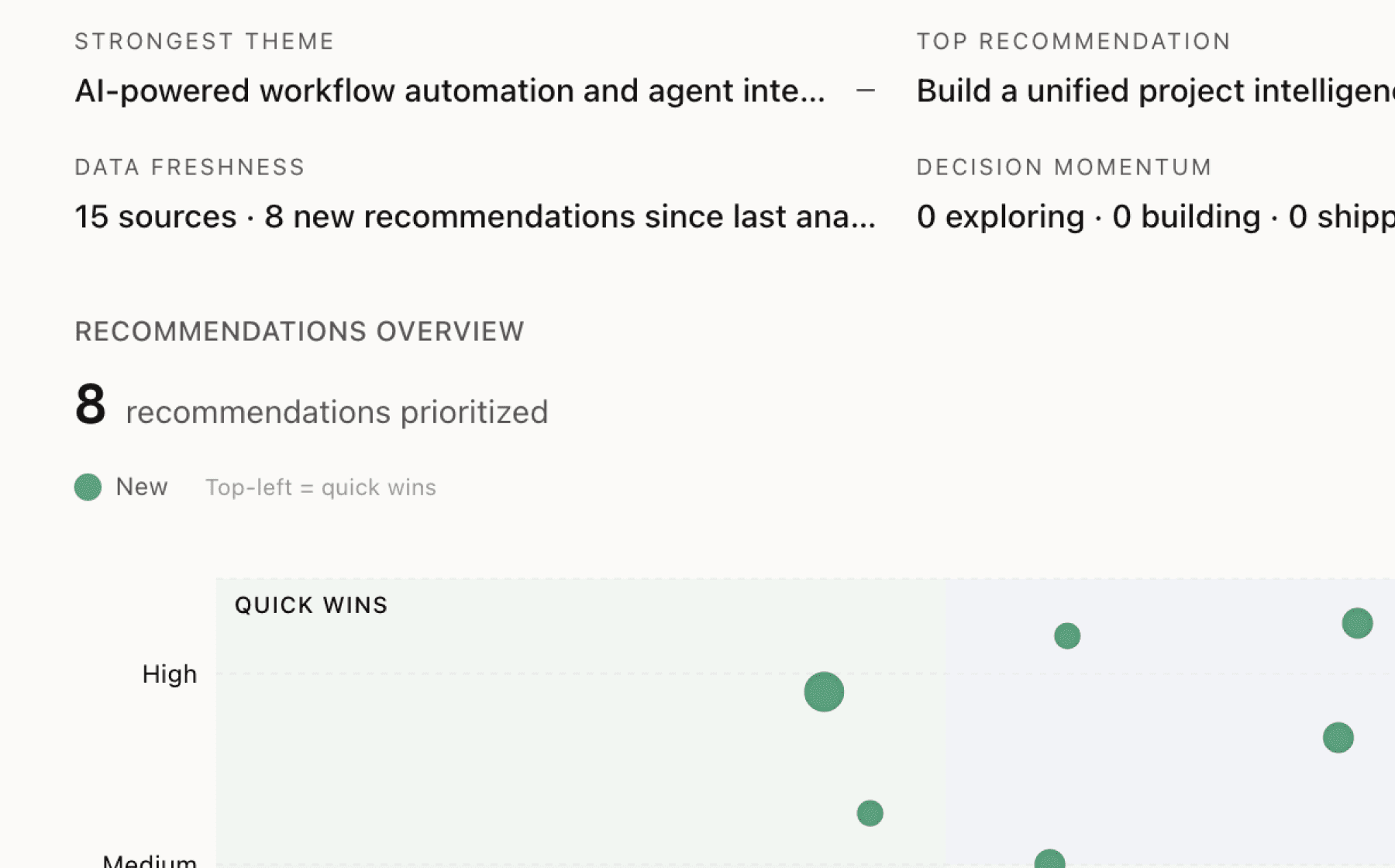

Mimir analyzed 10 public sources (app reviews, Reddit threads, forum posts) and surfaced 16 patterns and 8 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build compliance and explainability features as core product differentiators to unlock enterprise markets

High impact · Large effort

Rationale

Compliance has shifted from a cost center to a competitive moat that directly determines market access. Companies that build compliance in from day one can sell to regulated industries immediately, while competitors face 18-month retrofitting cycles. With compliance costs exceeding development budgets by 229% and SOC 2 becoming a de facto requirement for B2B AI contracts, enterprises won't even begin evaluations without these capabilities.

The evidence shows this is no longer optional. EU AI Act fines reach €35 million or 7% of global annual turnover, whichever is higher, and the regulation applies extraterritorially to any system serving EU users. ISO/IEC 42001 has become the standard for enterprise AI governance, and enterprise customers explicitly require explainability, human oversight on high-risk decisions, and data governance frameworks before signing contracts.

This recommendation addresses the root barrier preventing enterprise adoption. Build audit trails, decision explainability, human approval workflows for high-risk actions, and data governance controls into the core product architecture. Position these not as compliance checkboxes but as features that make AI systems safer and more trustworthy. This will differentiate Nexus in enterprise sales cycles and eliminate the primary objection blocking contract signatures.


The full product behind this analysis

Mimir doesn't just analyze — it's a complete product management workflow from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

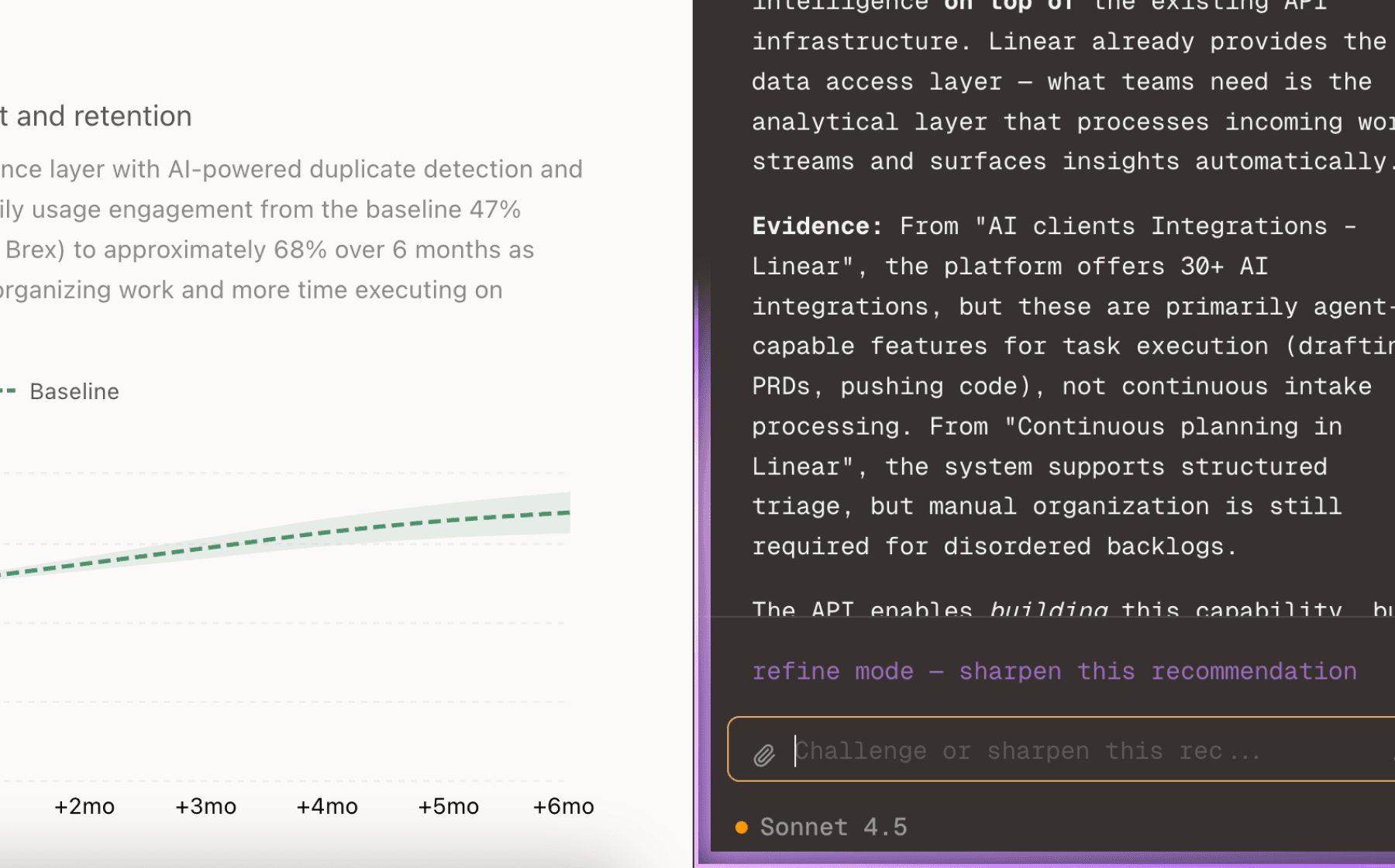

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

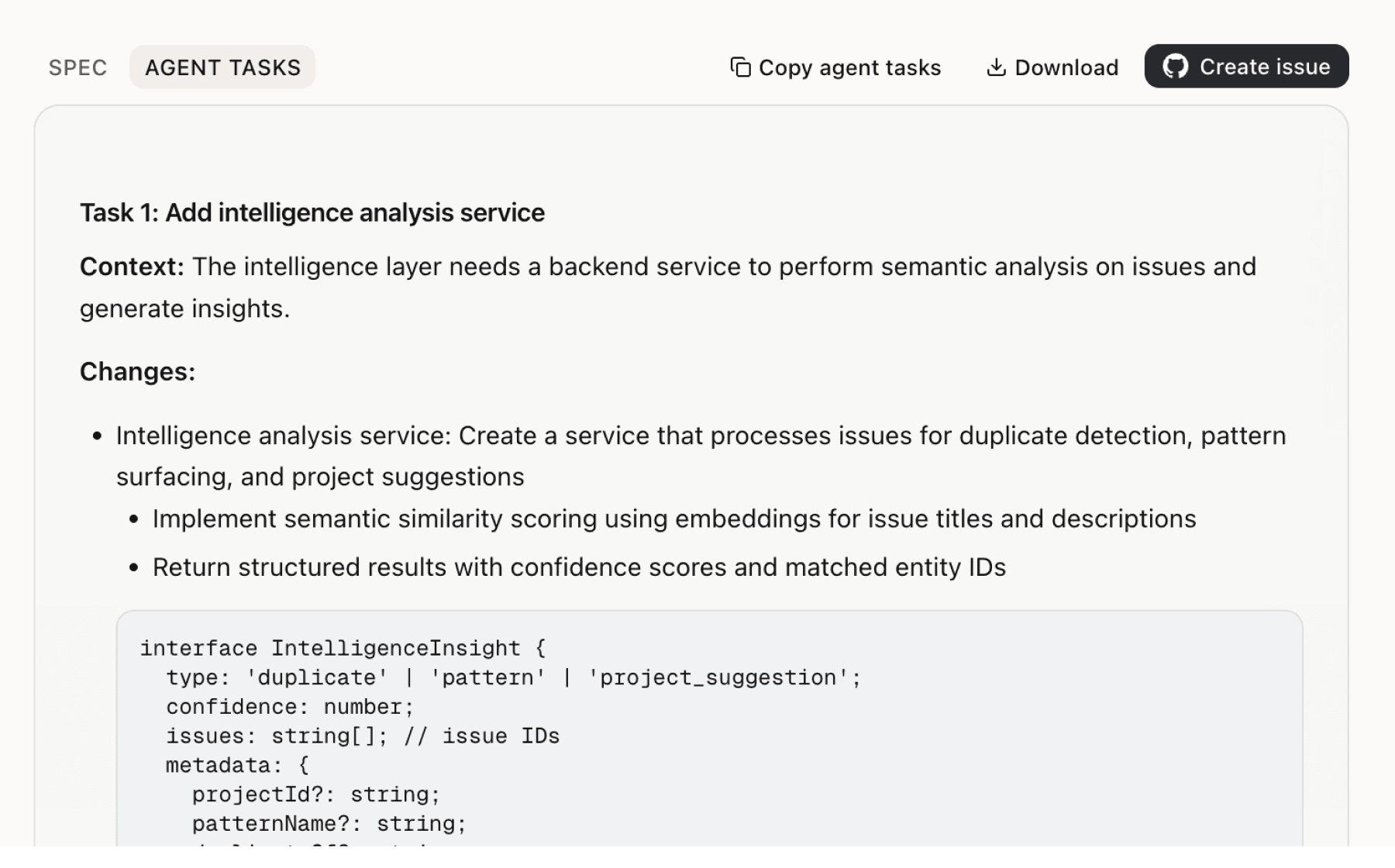

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

7 additional recommendations generated from the same analysis

Fully autonomous AI systems fail 86% of the time, but human-in-the-loop implementations quadruple productivity. The top 5% of companies reporting AI returns use human oversight, not full automation. This is not about limiting AI capability but about building reliability into systems so they handle edge cases and prevent catastrophic failures.

Organizations that redesign workflows before selecting models see 2x better returns, yet 95% of enterprises start with technology selection instead of process redesign. The 5% succeeding with AI are not using better models or spending more money—they are using AI better by redesigning end-to-end workflows first. This suggests Nexus should embed process redesign methodology directly into the product rather than assuming users arrive with optimized workflows.

Data integration costs 3x more than the AI technology itself, yet 96% of enterprises start AI projects without adequate data infrastructure. With 80% of business-critical information in unstructured formats and poor data quality imposing significant costs, inadequate data preparation is the hidden reason AI projects fail. Organizations cite data quality and completeness, not model capability, as the primary barrier to AI success.

The platform already enables deployment in days versus months, but 95% of GenAI pilots still fail to deliver measurable business impact because demos work on curated data while production encounters messy real data and edge cases. The evidence shows pilots prove concepts work but fail to scale, and 42% of companies have abandoned most AI initiatives. This gap between pilot success and production failure represents a retention risk—users experience initial success but fail to realize business value.

AI models have converged at similar performance levels with single-digit percentage gaps, and OpenAI's unsustainable unit economics (spending $1.69 per dollar of revenue) suggest the model innovation race is reaching diminishing returns. GPT-5.2 received underwhelming reviews despite higher benchmark scores, and researchers note that autoregressive LLMs generalize dramatically worse than people. The competitive advantage has shifted from model selection to integration capabilities and workflow design.

Explainability is both a compliance requirement and a trust requirement for human-in-the-loop systems. Users need to understand why an agent is escalating a decision, why it chose a particular path, or why it is uncertain. Without this transparency, users cannot provide effective oversight or learn to trust the system.

The platform already commits to not retaining workspace data for model training, but its privacy policy describes standard data collection practices (names, emails, billing addresses) and acknowledges that it cannot guarantee 100% security. Because compliance is a market gate and data governance is an explicit enterprise requirement, this area needs stronger messaging and controls.

Insights

Themes and patterns synthesized from customer feedback

Platform collects personal information (names, emails, phone numbers) for account management, service delivery, and analytics, with payment data stored separately by Stripe. Users expect data protection compliance and transparency around data usage, particularly given the emphasis on enterprise data governance in AI systems.

“Payment data is stored by Stripe, not retained by Nexus”

Platform reserves the right to suspend accounts, remove content, and report harmful behavior to authorities, with non-refundable purchases and an age restriction (18+). Clear governance and account safety policies establish user trust and mitigate platform risk.

“Services are restricted to users 18 years or older; persons under 18 are not permitted to use or register.”

Platform supports 3,000+ plugins plus custom API support, enabling teams to integrate with diverse systems without being locked into a single vendor ecosystem. This flexibility reduces switching costs and risk for enterprise adoption.

“Nexus supports 3,000+ plugins plus custom API support with no vendor lock-in”

Nexus retains all intellectual property rights in platform content, source code, and trademarks, with periodic policy updates and notification to users. Clear IP and policy frameworks prevent user confusion and establish platform stability.

“All intellectual property rights in Services content, source code, databases, and trademarks are owned by Nexus Enterprises.”

Platform uses cookies and tracking technologies for analytics and user behavior analysis, with opt-out capability for location tracking. Analytics data informs product improvements and user experience optimization.

“Company uses cookies and similar tracking technologies to collect information”

LLMs show diminishing returns, with higher benchmark scores delivering underwhelming user value; OpenAI spends $1.69 per dollar of revenue, and the share of companies abandoning most AI initiatives has risen to 42%, up from 17%. Enterprise failures stem from integration and workflow complexity, not model capability, indicating the market has shifted from model innovation to integration architecture.

“GPT-5.2 received 'underwhelming' and 'mixed bag' reviews despite higher benchmark scores, indicating a disconnect between model capabilities and perceived user value.”

Neither agent-first nor workflow-first approaches alone achieve the balance enterprises need for production reliability. The optimal architecture combines the reliability of structured workflows with the flexibility of AI agents that adapt throughout execution, preventing the brittleness that causes RPA and rigid systems to break when processes change.

“Platform claims to solve reliability vs flexibility trade-off that exists in most agentic systems”

AI initiatives should be tied to measurable business outcomes (revenue, conversion, cost reduction) rather than technical AI performance metrics. Teams that measure results and iterate based on business impact avoid the common pitfall of pursuing technical excellence while failing to deliver business value.

“Tie AI initiatives to measurable business outcomes (revenue, conversion, cost reduction) rather than technical AI performance metrics”

Current automation fails when scenarios don't match templates, routing work back to teams and defeating automation's purpose. Agents need adaptive intelligence to reshape their approach when hitting unexpected cases, improving both user trust and operational efficiency.

Fully autonomous AI systems fail 86% of the time because they cannot handle edge cases, cannot adapt when scenarios don't match templates, and introduce bias in critical applications. Human-in-the-loop approaches quadruple productivity and are the only viable path to reliable, fair automation that keeps humans in control.

“When scenarios don't fit templates in standard automation, work routes back to teams, defeating automation's purpose”

Enterprises now require AI vendors to provide explainability, human oversight, data governance, and certifications (SOC 2, ISO/IEC 42001, EU AI Act); compliance costs exceed development budgets by 229%. Companies with strong compliance frameworks built from day one can access regulated industries immediately; others face 18 months of retrofitting, making compliance both a market differentiator and a barrier to entry.

“Regulations are forcing AI companies to build what enterprise customers actually needed but were too polite to demand: transparency, explainability, human oversight, and data protection.”

95% of enterprises fail because they start with technology instead of process redesign; the 5% succeeding focus on workflow and integration architecture first, not technology or spending. When organizations redesign end-to-end workflows before selecting models, they see 2x better returns and avoid building automation around brittle, inflexible processes.

“Model convergence is happening—the competitive advantage is shifting from model selection to integration capabilities”

Data integration costs 3x more than AI technology itself, and 96% of enterprises start AI projects without proper data readiness. With 80% of business-critical information in unstructured formats and poor data quality cited as a primary failure cause, inadequate data infrastructure planning is the core reason enterprises fail at AI, not model selection.

“Data integration costs 3x more than the AI itself”

95% of GenAI pilots fail to deliver measurable business impact because they run on curated data, while production encounters messy real data and edge cases. Organizations repeatedly run pilots without learning from them or scaling what works, driving the high abandonment rate (42% of companies abandoned most AI initiatives) and revenue leakage despite $40B in spending.

“95% of GenAI pilots fail to deliver measurable P&L impact”

Nexus enables AI agent deployment in days rather than the months custom solutions require, at dramatically lower cost (100x less) and with measurable ROI within 2 weeks, as demonstrated by enterprise customers generating millions in yearly revenue. This speed to production directly improves business agility and user engagement by delivering tangible outcomes quickly.

“Orange customer achieved onboarding conversion rate improvement from Nexus implementation”

Users require the ability to create, modify, and test AI agents directly without engineering involvement, enabling rapid iteration on use cases and response to business needs. This is foundational to the product's core value proposition and directly impacts user engagement and retention.

“Platform enables business teams to transform workflows into autonomous agents without engineering involvement”

Run this analysis on your own data

Upload feedback, interviews, or metrics. Get results like these in under 60 seconds.