What Litmus users actually want

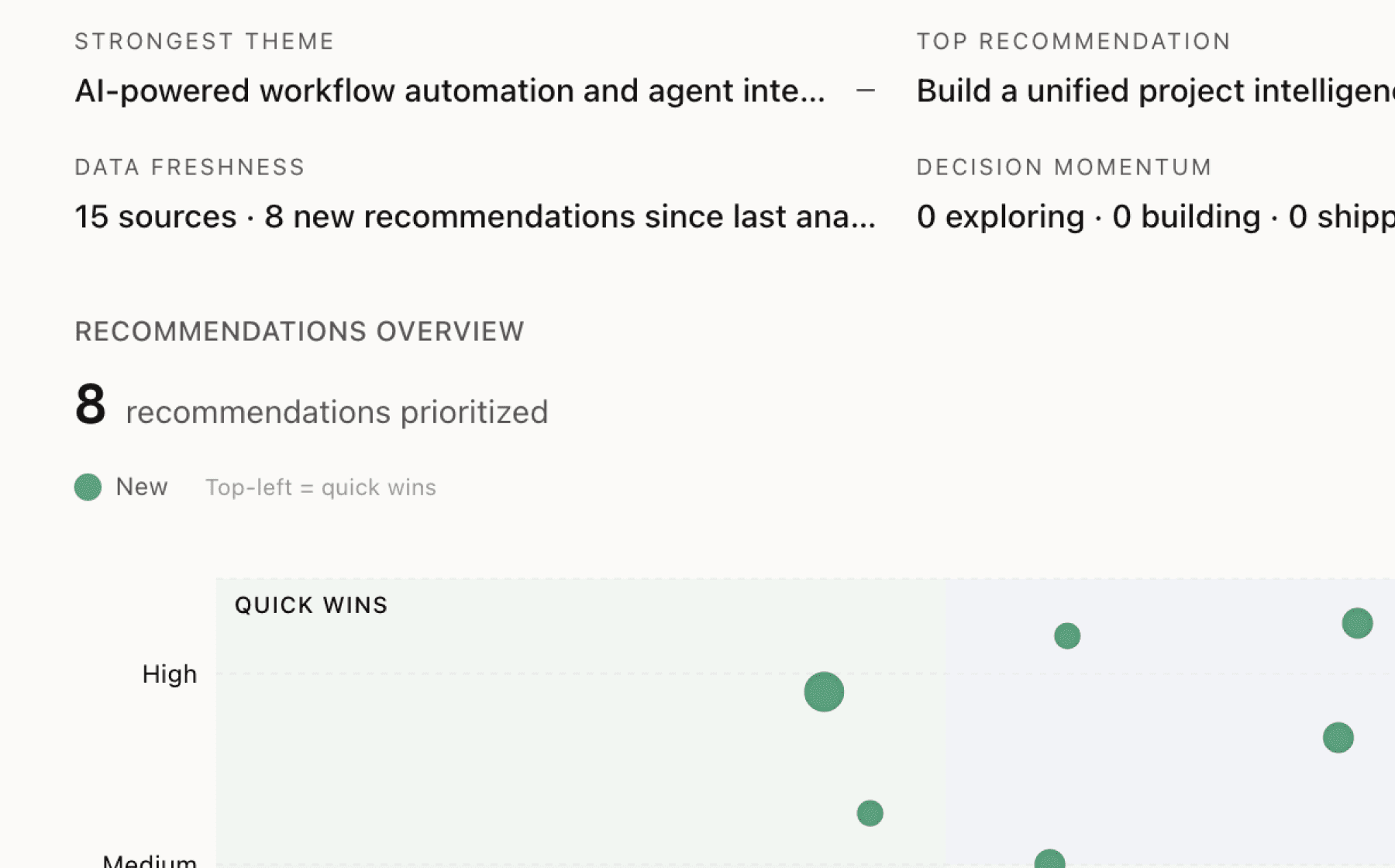

Mimir draws on public sources such as app reviews, Reddit threads, and forum posts. For Litmus, it analyzed 1 public source and surfaced 6 patterns with 6 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build a dashboard showing time-to-hire metrics and hours saved per assessment

High impact · Medium effort

Rationale

The core value proposition is giving engineering time back to product work, yet users have no way to see that impact quantified. Three separate points in the source frame time savings as the fundamental business case, but without measurement, teams can't demonstrate ROI to leadership or optimize their hiring process.

Create a simple dashboard that tracks assessment generation time, candidate evaluation throughput, and estimated hours saved compared to traditional interview processes. Show concrete numbers like "Generated 3 assessments in 12 minutes (vs. 2-3 days manual creation)" and "Evaluated 8 candidates in parallel without team involvement." This turns an abstract promise into visible proof.

This directly addresses the open question of "how fast we can help people hire" by making speed improvements concrete and shareable. For early-stage startups where every engineering hour counts, quantified time savings become the primary reason to choose Litmus over building assessments manually.

The full product behind this analysis

Mimir doesn't just analyze. It's a complete product management workflow, from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

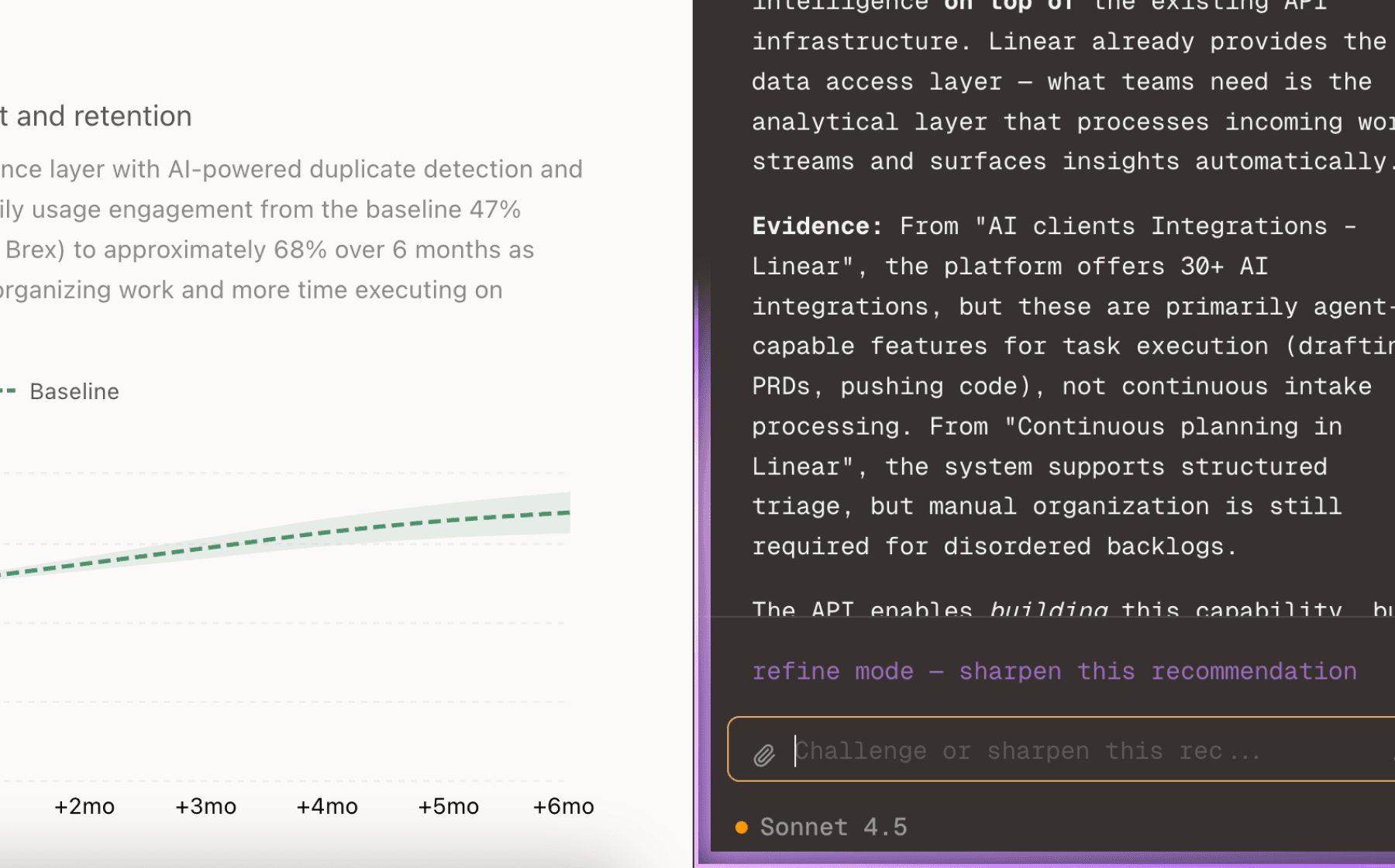

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

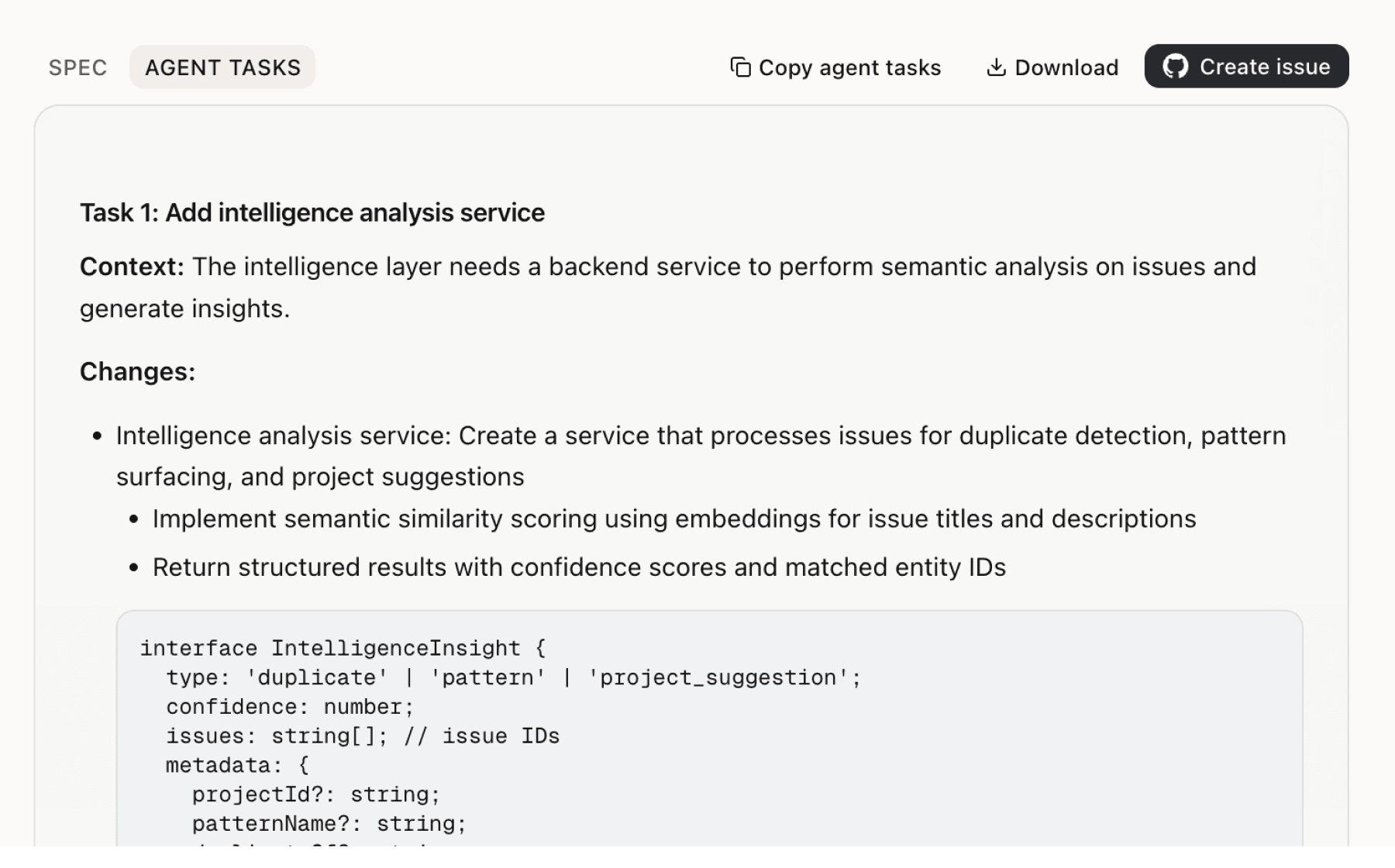

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

5 additional recommendations generated from the same analysis

Users see "how candidates actually code" but lack tools to efficiently compare that code against their own engineering standards. The platform surfaces commit history and code quality, but hiring managers still face the cognitive load of mentally mapping candidate work to their codebase conventions.

AI generates assessments from natural language requirements, but users start from scratch each time rather than learning from their own successful hires. Early-stage startups benefit most when they can quickly replicate what's worked before.

The platform surfaces commit history and code submissions, but candidates' actual problem-solving process remains opaque. Hiring teams want to see how candidates think through problems, not just the final result.

Litmus optimizes the hiring team experience but leaves candidates in the dark between assessment submission and results. For a platform emphasizing realistic work environments and respect for engineering time, the candidate experience gap undermines the brand promise.

Litmus automates assessment creation and evaluation but leaves teams to manually convert insights into interview questions. The time savings promise breaks down at the handoff between take-home and live interview.

Insights

Themes and patterns synthesized from customer feedback

Candidates complete assessments in their preferred IDE under a time limit, which is closer to real work than a traditional whiteboard interview or a controlled testing platform. This ecological validity makes assessment results more predictive.

“Candidates work in their preferred IDE and submit solutions within a time limit, simulating real work environment”

Litmus provides centralized hiring management within a single platform, eliminating context-switching between multiple tools that can fragment the hiring process and add friction.

“Centralized hiring management to avoid switching between multiple tools”

The platform surfaces candidate thinking patterns, code quality, and engineering decisions through observable assessments and analysis tools, moving beyond binary pass/fail decisions. Users gain visibility into commit history, code submissions, and AI-generated analysis to inform hiring confidence.

“Platform provides visibility into candidate code quality, commit history, and AI-generated analysis of submissions”

Users can describe assessment needs in plain English and provide codebase context, with AI generating complete take-home assessments that can be iteratively refined. This removes the manual burden of assessment creation while maintaining customization.

“Users can describe assessment requirements in plain English and optionally link repositories or upload documents for context”

Litmus reduces time spent on hiring by automating assessment generation and evaluation, addressing the core constraint that every interview hour diverts engineering teams from product work. The platform generates assessments in minutes rather than days and enables parallel candidate evaluation without team burnout.

“Every hour you spend interviewing is an hour you're not building.”

Litmus replaces generic coding problems with assessments based on company-specific codebases, providing clear signal about how candidates actually code rather than relying on algorithm memorization. This shift from ambiguous hiring signals to concrete evidence of engineering capability is a core differentiator.

“No more guessing. No more generic problems. Just real engineering work that tells you what you need to know.”

Run this analysis on your own data

Upload feedback, interviews, or metrics. Get results like these in under 60 seconds.